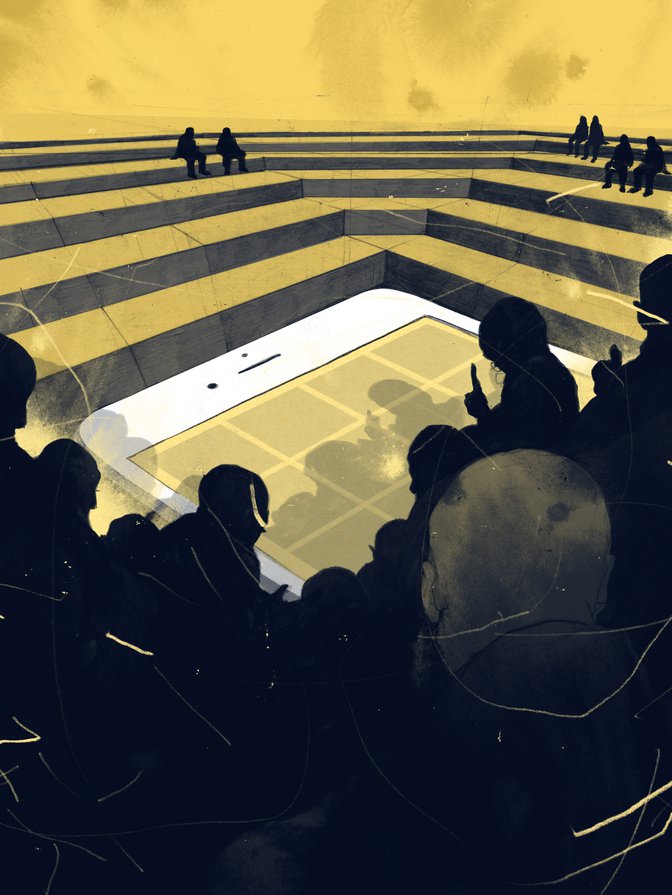

In May 2019, shortly before the International Grand Committee on Big Data, Privacy and Democracy met in Ottawa, a doctored video of US Democratic Speaker of the House Nancy Pelosi went viral. The video had slowed Pelosi’s words, making her appear intoxicated. Later, the Facebook representatives testifying before the committee, Neil Potts and Kevin Chan, were asked about their company’s policies on altered material (House of Commons 2019). They responded that Facebook would not remove the Pelosi video, but would label it to ensure that users knew it was doctored. (Journalists later found postings of the video without the label.) After further probing from British MP Damian Collins, Potts clarified that Facebook would remove pages masquerading as a politician’s official page. Altered videos could stay; pages impersonating a politician could not.

Questioning of social media representatives continued, in a marathon session lasting three hours. Eventually, the floor was handed to the Speaker of the House of Assembly from St. Lucia, MP Andy Daniel. He had found Facebook’s answers intriguing: for some time, the prime minister of St. Lucia had been trying to get Facebook to remove a page impersonating him, but Facebook had not responded. At the hearing, Potts and Chan promised to look into the case; it is not clear if they did.

The episode illustrates many of the problems with our current content moderation landscape. First, social media companies’ processes are often opaque, their rules arbitrary and their decisions lacking in due process.

Second, companies have global terms of service, but do they enforce them evenly around the world? Or do they pay more attention to larger markets such as the United States and Germany, where they are worried about potential legislative action?

Third, the episode showed a lack of accountability from top employees at major social media companies. Mark Zuckerberg has testified before the US Congress and the European Parliament. Zuckerberg even returned to Congress in September 2019 to meet with senators and US President Donald Trump (Lima 2019). But he seems determined not to testify anywhere else. Between testimonies to Congress, Zuckerberg has declined to testify in the United Kingdom and Canada, despite subpoenas.

Removing a page impersonating a politician is fairly straightforward, yet this simple question raised a host of complicated issues. It also showed that one hearing barely scratched the surface. Clearly, we need institutions to provide regular, consistent meetings to answer pressing questions, discuss content moderation standards and push for further transparency from social media companies.

This essay examines one idea to improve platform governance — social media councils. These councils would be a new type of institution, a forum to bring together platforms and civil society, potentially with government oversight. Social media councils would not solve the structural problems in the platforms’ business models, but they could offer an interim solution, an immediate way to start addressing the pressing problems in content moderation we face now.

The Central Challenge of Content Moderation

It has been more than a decade since internet companies cooperated with human rights and civil-liberties organizations to create the Global Network Initiative (GNI), a set of voluntary standards and principles to protect free expression and privacy around the world. Since the GNI’s creation in 2008, questions have only grown more urgent about how to coordinate standards and whether voluntary self-regulation suffices. The discussion has also shifted to see content moderation as one of the central challenges for social media. Content moderation has become a key area of regulatory contestation, as well as a focus of concern about how to ensure freedom of expression.

Content moderation is already subject to various forms of regulation, whether the Network Enforcement Law (NetzDG) in Germany (Tworek 2019a), self-regulatory company-specific models, such as the oversight board under development at Facebook, or subject-specific voluntary bodies such as the Global Internet Forum to Counter Terrorism (GIFCT).

The Facebook model is a novel example of independent oversight for content moderation decisions. In 2018, Mark Zuckerberg announced that he wanted to create “an independent appeal [body]” that would function “almost like a Supreme Court” (Klein 2018). After consultations in many countries, Facebook announced in mid-September 2019 the charter and governance structure of their independent oversight board (Facebook 2019a). A trust will operate the board to create independence from the company. It also seems possible that Facebook may open up the board to other companies in the future.

Some legal scholars, Evelyn Douek (2019) for example, have praised Facebook’s plans as a step in the right direction, because the board will at least externalize some decisions about speech. Yet the board is slated to have only 40 members, who will have to deal with cases from around the world. It is inevitable that much context will be lost. We do not know which cases will be chosen or how quickly decisions will be made. Decisions that take a long time to make, as they do at the US Supreme Court, could come too late for people such as the Rohingya, whose violent expulsion from Myanmar was accelerated by hate-filled Facebook posts. Facebook plans to have its board running by early 2020. Even if it were to function seamlessly, questions remain about whether models based on one company can work or whether there should be more industry-wide supervision.

Unlike the single-company model of Facebook’s oversight board, the GIFCT enables coordination between major social media companies (Facebook, Twitter, YouTube and Microsoft) who want to remove terrorist content. Since its emergence in summer 2017, the GIFCT has facilitated a form of industry-wide cooperation, but without public oversight. The GIFCT published its first transparency report in July 2019 (GIFCT 2019). Among other things, the GIFCT houses a “common industry database of ‘hashes’ — unique digital fingerprints — for violent terrorist imagery or terrorist recruitment videos or images that the member companies have removed from their services” (Heller 2019, 2), although it is unclear exactly how the GIFCT defines terrorism. For now, the GIFCT remains mostly a mystery to those outside the companies or specific civil society organizations and governments who cooperate with the forum. To give a few examples, we do not even know where the database is housed. The GIFCT has no provision for third-party researcher access. We do not know if additions to the database by one company are ever disputed by another or if there are even mechanisms to resolve such a dispute.

A More Inclusive Solution

Social media councils would take a different approach than the GIFCT or Facebook’s oversight board. They would be multi-stakeholder fora, convened regularly to address content moderation and other challenges. Civil society organizations would participate from the start. Ironically, although social media promised to connect everyone, platforms’ users have far too often been excluded from regulatory conversations. The Christchurch Call, for example, was initially only signed by companies and governments.1

Social media councils would include representatives from marginalized groups and civil society organizations across the political spectrum to ensure that any solutions incorporate the experiences and suggestions of those people often most affected by content moderation decisions. The general idea is supported by others, including the UN Special Rapporteur on the Right to Freedom of Opinion and Expression (Global Digital Policy Incubator, ARTICLE 19 and David Kaye 2019). By mandating regular meetings and information-sharing, social media councils could become robust institutions to address problems that we have not yet even imagined.

The exact format and geographical scope of social media councils remain up for debate. One free speech organization, ARTICLE 19, currently has an open consultation about social media councils; the consultation will close2 on November 30, 2019. The survey includes questions about councils’ geographical scope (international or national) and the remit (whether the council would review individual cases or decide general principles).

If social media councils do emerge, there are ways of combining the national and the international. A national social media council might operate on the basis of an international human rights framework, for example. National social media councils could be set up simultaneously and designed to coordinate with each other. Or, an international council could exist with smaller regional or national chapters. If these councils address content, it is worth remembering that international cooperation will be difficult to achieve (Tworek 2019b).

The regulatory structure of social media councils is another foundational question. Governance need not always involve government intervention. Broadly speaking, there are four different models of regulation with different levels of government involvement, as explained in a paper on classifying media content from the Australian Law Reform Commission (2011, 17):

- Self-regulation: Organizations or individuals within an industry establish codes of conduct or voluntary standards. Self-regulation may be spurred by internal decisions within the industry, public pressure for change or concern about imminent government action. Self-regulating associations are generally funded by membership fees. The industry itself enforces rules and imposes punishments for violations.

- Quasi-regulation: Governments push businesses to convene and establish industry-wide rules and compliance mechanisms. Governments do not, however, determine the nature of those rules nor their enforcement.

- Co-regulation: An industry creates and administers its own rules and standards. However, governments establish legislation for enforcing those arrangements. Broadcasting is a classic example. The online advertising industry in Israel attempted to self-regulate and failed; although the industry wished to self-regulate, the public wanted to see co-regulation (Ginosar 2014).

- Government or statutory regulation: Governments create legislation for an industry and implement a framework and its rules. Governments may also implement international regulation on a national level. Enforcement mechanisms may include councils or arm’s-length regulatory bodies, such as data protection authorities.

Some regulatory forms can be hybrid and incorporate elements from several of these categories. There could also be differentiated membership and expectations of council members, based upon the size of the social media company, with higher expectations placed on the major players.

Social media councils could fulfill a wide range of functions, including all or some of those listed here:

- Give a voice to those who struggle to gain attention from social media companies. Social media have enabled underrepresented voices to be heard, but those voices are often left out of conversations about how to improve social media. Social media council meetings could be a place to hear from those who have been abused or affected by online harassment. Or they could be a place to hear from those who have had difficulty appealing takedowns.

- Provide a regular forum to discuss standards and share best practices. These discussions need not standardize content moderation between companies. Different content moderation practices are one way of providing users with choices about where they would like to interact socially online. But sharing best practices could help companies to learn from each other. Might Twitter and Facebook have learned something about how to combat anti-vaxxer disinformation from Pinterest, for example (Wilson 2019)?

- Create documented appeals processes.

- Create codes of conduct that avoid the problems that plagued the European Union’s Code of Conduct on Countering Illegal Hate Speech Online, from which civil society organizations walked out in frustration at the process (Bukovska 2019).

- Establish frameworks to enable access to third-party researchers, including protocols to protect users’ privacy and companies’ proprietary information.

- Develop standards and share best practices around transparency and accountability, especially in the use of artificial intelligence (AI) and algorithms to detect “problematic” speech.

- Establish industry-wide ethics boards on issues such as AI. This might avoid public relations debacles such as the short-lived Google AI Ethics Board (Piper 2019).

- Discuss labour issues around content moderators.

- Address issues of jurisdiction and international standards.

- Establish fines and other powers for government or other enforcement agencies to support the council’s authority and decisions.

A social media council may be more or less proactive in different places. That could depend upon a country or region’s own cultural policies. Canada, for example, might like to ensure the council examines the implications of the Multiculturalism Act for content moderation (Tenove, Tworek and McKelvey 2018).

Regardless of scope, there should be coordination on international principles for social media councils. International human rights standards and emerging international best practices would provide a helpful baseline and smooth coordination among countries and social media companies. One source of standards is the “Santa Clara Principles” on content moderation.3 A more established source is international human rights law.

In 2018, the UN Special Rapporteur on the Promotion and Protection of the Right to Freedom of Opinion and Expression, David Kaye, suggested a framework for applying international human rights to content moderation. “A human rights framework enables forceful normative responses against undue State restrictions,” he proposed, so long as companies “establish principles of due diligence, transparency, accountability and remediation that limit platform interference with human rights” (UN General Assembly 2018, para. 42). Social media councils can push companies to establish those principles.

When companies try to have global terms of service, international human rights law on speech makes sense as a starting point. Facebook’s charter for its new oversight board now explicitly mentions human rights (Facebook 2019b). One clear basis in international speech law is Article 19 of the International Covenant on Civil and Political Rights (ICCPR) (UN General Assembly 1966). Adopted in 1966, the ICCPR was ratified slowly by some countries and entered into force in 1976. The United States did not ratify the Covenant until 1992 (and then with some reservations).

Although individual countries differ in their approaches to speech law, international covenants such as the ICCPR provide common ground for transatlantic and transnational cooperation on freedom of expression (Heller and van Hoboken 2019). The UN Human Rights Committee functions as an oversight body for the ICCPR.

A Possible Path Forward

Creating an industry-wide regulatory body is “easier said than done” (Ash, Gorwa and Metaxa 2019). There are many obvious questions. What is “the industry”? What would be the geographical scope of such a body? How could it be structured to balance national, regional and international concerns? How should it ensure freedom of expression and enshrine compliance with international human rights law, while allowing relevant services to operate in different jurisdictions with different legal standards? How could social media councils fit into dispute resolution mechanisms? There are multiple civil society organizations such as ARTICLE 19 and Stanford’s Global Digital Policy Incubator working on these questions. Many of them are represented on the High-Level Transatlantic Working Group on Content Moderation and Freedom of Expression Online, which will be exploring such dispute resolution mechanisms over the next few months and considering how transatlantic cooperation might function in this space.

Social media councils may not be a panacea, but they are one possible path forward. Further discussions will help to clarify the jurisdictional and institutional parameters of such a council. The specific set-up may differ for each state, depending upon its communications policies and extant institutions. There are also questions about how to coordinate councils internationally or whether to create cross-border councils with participation from willing countries, such as those participating in the International Grand Committee on Big Data, Privacy and Democracy.

There are issues that councils do not resolve, such as tax policy or competition. Still, they offer a potential solution to many urgent problems. They could put the societal back into social media. They could establish fair, reliable, transparent and non-arbitrary standards for content moderation. At a time when decisions by social media companies increasingly structure our speech, councils could offer a comparatively swift method to coordinate and address pressing problems of democratic accountability. Creating a democratic, equitable and accountable system of platform governance will take time. Councils can be part of the solution.