In May 2017, a few months before the Kenyan general election, the Star newspaper in Nairobi reported that British data analytics and public relations firm Cambridge Analytica was working with the ruling Jubilee coalition (Keter 2017). Then, days before Kenyans went to the polls, global privacy protection charity Privacy International told the BBC that the company had been paid $6 million in the contract (Bright 2017). Stories had long been simmering about Cambridge Analytica’s association with illicit data harvesting, and it later emerged that the firm had been involved in the US presidential and Brexit campaigns. Why would a company associated with a US presidential election or a vote on the future of the European Union be involved in a general election in an African country?1

In fact, this wasn’t the first time the public relations firm had been working in the area of African politics. They had been active in South Africa and Nigeria, and according to the firm’s website at the time, they had worked in Kenya during the previous election runup, in 2013, building a profile of the country’s voters through a survey of more than 47,000 Kenyans and developing a campaign strategy “based on the electorate’s needs (jobs) and fears (tribal violence)” (cited in Nyabola 2018, 160). Cambridge Analytica’s research identified the issues that resonated most with each constituent group, measuring their levels of trust, how they voted and how they digested political information; on the basis of this data, Cambridge Analytica recommended that the campaign focus on the youth as a segment that “could be highly influential if mobilised” (ibid., 161). Accordingly, they concentrated on developing an online social media campaign for the Jubilee presidential campaign to “generate a hugely active following” (ibid.).

In Kenya in 2013, politics received more attention on the internet than it ever had before. Monitoring political conversation online amid broader efforts to curb hate speech demanded significant organization. In the aftermath of the post-election violence in 2007-2008, social media had been named in passing as playing a role; by 2013, as the power of these spaces had become more recognized by the public, fears grew that these platforms could be used to mobilize ethnic hatred and even worse violence. The non-profit technology company Ushahidi originated around the 2008 violence to “map reports of violence in Kenya after the post-election violence in 2008”2 and would eventually become one of Kenya’s digital success stories. In 2013, Ushahidi expanded its mandate to study patterns of hate speech online, launching an initiative called “Umati” (Swahili for crowd) to monitor social media channels for any kind of hate speech and alert authorities before it escalated. These developments, combined with the shift toward digital reporting of election results, suggest the scale of changes that made 2013 Kenya’s first digital election (Hopkins 2013).

Kenya’s experience with Cambridge Analytica and other data analytics firms raises the question of political speech on internet platforms, and specifically, what platform governance in relation to political speech could and should look like. For Kenya, the challenge was complex. First, the country had a history of violent political processes, and there was every reason to fear that the election in 2017 would also be violent if poorly managed. Second, the firms implicated in the analytics operations were all foreign-owned, which raises the spectre of foreign manipulation of democratic processes. Third, there is the question of capital and money, given that none of the platforms that were connected to this experience were Kenyan, nor were the implicated analytics firms. Should foreign companies be able to meddle in political processes where they have no skin in the game, and in particular, when they are doing so for profit?

And, in fact, Kenya did live with the consequences of the manipulation of political speech on the internet in the 2007, 2013 and 2017 elections. In 2007, social media was identified, alongside radio and text messages, as one of the channels used to distribute hate speech and incitement to violence, fuelling the deadly 2007-2008 post-election violence that resulted in at least 1,500 deaths and far more widespread displacement. In 2013, manipulation of political speech online was part of a broader campaign to shift public opinion away from demands for accountability for that violence and toward portraying the International Criminal Court as a tool of Western interference. Finally, in 2017, the distribution of hate speech on political platforms not only skewed political behaviour, but also contributed to one of the most virulent election campaigns in the country’s history.

Nor has Kenya been the most grievous example of this phenomenon. A disproportionate amount of time has been spent debating the outcomes of the Brexit vote and the 2016 US presidential elections, because these countries exert a large influence on the global political economy. Yet, the impact of these issues is felt hardest in poor countries, where authoritarianism is a constant threat and regulations on speech are somewhat more fluid. These factors may explain why even Facebook itself acknowledges that its entry into Myanmar was connected to hate speech that fuelled the genocide against the Rohingya (Warofka 2018), or why the platform is increasingly the preferred space for disseminating hate speech in local languages in countries like South Sudan (Reeves 2017).

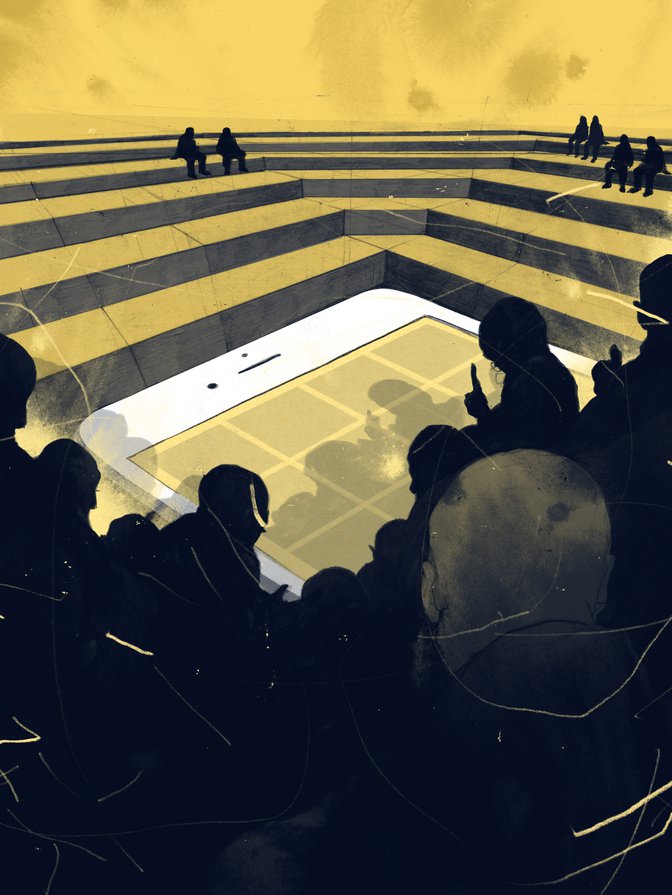

Evidently, manipulation of political messaging online will be one of the major platform governance challenges of the coming years, given the frequency with which this style of manipulation is happening around the world. In the analogue world, political speech has always been recognized as a special category of speech governed by a subset of general norms and regulations on media and speech. Most countries have tight restrictions on what political candidates can and cannot say in traditional media, and publications are required to distinguish between political advertorials and organic journalism. These lines are starkest in countries with a long journalism tradition where the absence of these demarcations has led to serious social and political issues. Germany, for example, has severe restrictions on how political speech can be represented in the media, precisely because the media was such a focal point for Nazi mobilization in the lead-up to World War II. Yet, only after the Cambridge Analytica scandal are we seeing more attention paid to the development of similar regulations online.

At heart, the methods used to create and disseminate hate speech online are strikingly similar to those used offline — playing on people’s vaguest yet most potent fears; elevating the spectre of “The Other” as an existential threat to the dominant way of life or world view. These are all tricks that are as old as political speech itself. What is different is that internet platforms allow such political speech to be highly fragmented and targeted. The same ability to buy advertisements that allow you to sell jeans only to 18-to-30-year-olds living in Nairobi who are online between 7:00 and 9:00 p.m., as opposed to sending blanket advertisements to an unsegmented audience, is now being used for political speech. What this means is that the individual voters within a single state are not consuming the same political information; it is difficult to consider a public sphere truly representative when public discourse is reduced to highly inward-looking, fragmented groups talking past each other, rather than a true rational-critical exchange involving debate and deliberation.

At the same time, shifting so much of our political discourse online makes it hard to regulate. The West is experiencing a fraction of what this looks like in the rest of the world, where pockets of people are becoming increasingly radicalized on highly targeted or specialized websites. But in other parts of the world, the issue is compounded by the language problem. For example, in Ethiopia, even though the official language is Amharic, much of the content online is generated in one of the country’s many other languages, because the internet makes it possible for people to do that in a way that a national newspaper or television station cannot. This is a double-edged sword: on the one hand, the internet has been great for creating space for the preservation of smaller languages, but on the other hand, this very characteristic makes it difficult to effectively moderate what people are saying online, and some of it is really nasty (Fick and Dave 2019).

Underpinning all this is the question of accountability: who should be held responsible when all of this falls apart? The case of Myanmar has starkly demonstrated that just because a platform is ready to penetrate a new market doesn’t mean it should. In 2018, the United Nations identified Facebook as a vector for hate speech calling for the genocide of the Rohingya.3 That report was widely circulated, and Facebook made some strong statements acknowledging its recommendations and pledging to work toward them. And then, nothing. As it stands, there is practically nothing that can be done to make sure that Facebook is held accountable, or even to define what that accountability would look like, because the main interested party in that genocide was the government itself. So, what does platform regulation look like when the platform that is upending the political space is run elsewhere, governed by laws outside your jurisdiction, and ultimately answerable to shareholders in a country other than your own?

At this stage, it should be very clear that platform governance with regard to political speech should be an urgent priority. But the imperatives must still be balanced against the need to preserve free speech and the characteristics of a digital commons that make these platforms such powerful spaces for political action. Nor can the goals of such governance be transferred wholesale to states without consideration of the nature of the states in question. In many of the countries given as examples in this essay, the main perpetrator or the main interested party in misusing platforms to disseminate hate speech is the state itself.

One idea toward this end would be enhancing multilateral governance, headed up by an international norm-setting organization that identifies principles for political speech online and works with regulatory agencies in states, to both promote the norms and develop standards for implementation. There are already a large number of internet governance dialogues out there. Can they be better coordinated into a harmonized system that builds on what exists for the regulation of political speech in the analogue domain? Such coordination would also enhance the conversation between those working in political analysis, who recognize this as a growing problem, and those working in technology, who might not see how connected this issue is to what has gone before.

Self-regulation is also an option. The first stage would be transparency, namely, that political actors would be forced to disclose their identities when disseminating political speech online. But transparency may also involve public monitoring and observation. Crucially, content moderation needs to be systematized and made more transparent, and a platform should not be allowed to operate in a market if it is not prepared to take full responsibility for the political consequences it might trigger. This means, for example, that platforms should not be allowed to operate in a country until full content moderation in all the major languages of that country is operational. Yes, it’s expensive and burdensome, but the alternative is Myanmar.

Ultimately, there is nothing truly new under the sun. Each iteration of media throws up the same uncertainties and challenges that have gone before and requires a renewed effort to address these complications for everyone’s benefit. History records the panic that surrounded the development of the printing press — the idea that common people would be able to access potentially explosive information sent the church, in particular, into a tailspin. The popularization of tracts inflamed political behaviour across Europe and led to a variety of unexpected outcomes, including, arguably, what would become the Protestant Reformation. Eventually, regulations were developed to control what could be said in these tracts, media ethics became normalized, and laws on libel and defamation were much more strictly enforced.

None of this undercuts the fact that the internet has changed the way political information is generated and travels, but it does put things into perspective. It’s a reminder that political speech is treated as a special class of speech on other platforms for a reason. It is a reminder that platform governance has been tricky before, but it was accomplished, even though it was not always timely. It is a reminder that while the challenge seems large and complex, it is not insurmountable.