Since its inception some 60 years ago (Anyoha 2017), artificial intelligence (AI) has evolved from an arcane academic field into a powerful driver of social transformation. AI and machine learning are now the basis for a wide range of mainstream commercial applications, including Web search (Metz 2016), medical diagnosis (Davis 2019), algorithmic trading (The Economist 2019), factory automation (Stoller 2019), ridesharing (Koetsier 2018) and, most recently, autonomous vehicles (Elezaj 2019). Deep learning, a form of machine learning, has dramatically improved pattern recognition, speech recognition and natural language processing (NLP). But AI is also the basis for a highly competitive geopolitical contest.

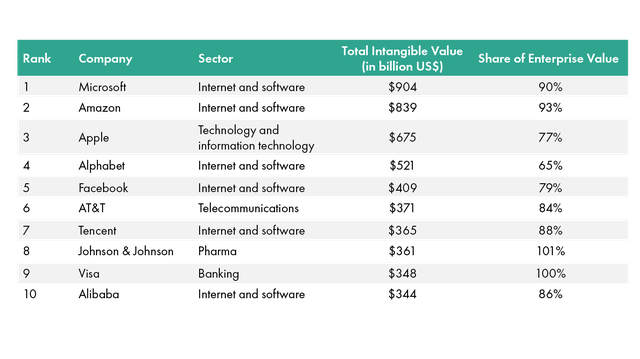

Much as mass electrification accelerated the rise of the United States and other advanced economies, so AI has begun reshaping the contours of the global order. Data-driven technologies are now the core infrastructure around which the global economy operates. Indeed, whereas intangible assets (patents, trademarks and copyrights) were only 16 percent of the S&P 500 in 1976, they comprise 90 percent today (Ocean Tomo 2020, 2). In fact, intangible assets for S&P 500 companies are worth a staggering US$21 trillion (Ross 2020; see also Table 1). In this new era, power is rooted in technological innovation. Together, renewable energy technologies (Araya 2019b), fifth-generation (5G) telecommunications (Araya 2019a), the Industrial Internet of Things (Wired Insider 2018) and, most importantly, AI are now the foundations for a new global order.

Table 1: Largest Companies by Intangible Value

At the research level, the United States remains the world’s leader in AI. The National Science Foundation (NSF) provides more than US$100 million each year in AI funding (NSF 2018). The Defense Advanced Research Projects Agency (DARPA) has recently promised US$2 billion in investments toward next-generation AI technologies (DARPA 2018). In fact, the United States leads in the development of semiconductors, and its universities train the world’s best AI talent. But, while the United States retains a significant research dominance, national leadership is weak and resources are uneven.

While the United States has established a strong lead in AI discovery, it is increasingly likely that China could dominate AI’s commercial application (Lee 2018). China has now emerged as an AI powerhouse (Simonite 2019), with advanced commercial capabilities in AI and machine learning (Lee 2020) and a coherent national strategy. Alongside China’s expanding expertise in factory machinery, electronics, infrastructure and renewable energy, Beijing has made AI a top priority (Allen 2019). What is obvious is that China has begun a long-term strategic shift around AI and other advanced technologies (McBride and Chatzky 2019).

Toward a New Global Order

Together, China and the United States are emerging as geopolitical anchors for a new global order (Anderson 2020). But what kind of global order? How should actors within the existing multilateral system anticipate risks going forward? Even as the current coronavirus disease 2019 (COVID-19) crisis engulfs a wide range of industries and institutions, technological change is now set to undermine what is left of America’s Bretton Woods system (Wallace 2020). To be sure, weaknesses in the current multilateral order threaten to deepen the world’s geopolitical tensions.

The truth is that a global system shift is already under way (Barfield 2018). As Rohinton P. Medhora and Taylor Owen (2020) have pointed out, data-driven technologies and the current pandemic are both manifestations of an increasingly unstable system. For almost five decades, the United States has guided the growth of an innovation-driven order (Raustiala 2017). But that order is coming to an end. Accelerated by the current health crisis, the world economy is now fragmenting (Pethokoukis 2020). Beyond the era of US hegemony, what we are increasingly seeing is a rising techno-nationalism that strategically leverages the network effects of technology to reshape a post-Bretton Woods order (Rajan 2018).

One could observe (as Vladimir Lenin purportedly did) that “there are decades where nothing happens, and there are weeks where decades happen.” Even as nation-states leverage data to compete for military and commercial advantage, disruptive technologies such as AI and machine learning are set to transform the nature and distribution of power. What seems clear is that we are entering an era of hybrid opportunities and challenges generated by the combination of AI and a cascading economic crisis. The spread of smart technologies across a range of industries suggests the need for rethinking the institutions that now govern us (Boughton 2019).

In order to manage rising tensions, a new and coordinated global governance framework for overseeing AI is needed. The absence of effective global governance in this new era means that we are facing significant turbulence ahead. Indeed, in the wake of COVID-19, the global economy could contract by as much as eight percent, moving millions of people into extreme poverty (World Bank 2020). Any new framework for multilateral governance will need to oversee a host of challenges overlapping trade, supply-chain reshoring, cyberwar, corporate monopoly, national sovereignty, economic stratification, data governance, personal privacy and so forth.

Global Governance in the AI Era

Perhaps the most challenging aspect of developing policy and regulatory regimes for AI is the difficulty in pinpointing what exactly these regimes are supposed to regulate. Unlike nuclear proliferation or genetically modified pathogens, AI is not a specific technology; it’s more akin to a collection of materials than a particular weapon. It is also an aspirational goal (Walch 2018), much like a philosopher’s stone that drives the magnum opus of computer science (the agent that unlocks the alchemy). To take only one example, Peter J. Denning and Ted G. Lewis (2019) classify the idea of “sentient” AI as largely “aspirational.”

The dream of the intelligent machine now propels computer science, and therefore regulatory systems, around the world. Together, research in AI and aspirational expectations around sentient machines are now driving fields as diverse as image analysis, automation, robotics, cognitive and behavioural sciences, operations research and decision making, language processing and the video gaming industry (The Verge 2019) — among many others. Regulating AI, therefore, is less about erecting non-proliferation regimes (a metaphor often used for managing AI; see, for example, Frantz 2018), and more about creating good design norms and principles that “balance design trade-offs not only among technical constraints but also among ethical constraints” (Ermer and VanderLeest 2002, 7.1253.1), across all sorts of products, services and infrastructure.

To explain this challenge with greater precision, we need to better appreciate the truism that “technology is often stuff that doesn’t work yet” and apply a version of this truism to the discourse on AI as “the stuff computers still can’t do.” In 1961, the development of a computer “spelling checker” (today a ubiquitous application) was treated as AI and fell to the inventors at the Stanford Artificial Intelligence Laboratory (Earnest n.d.). Today, it is “just” a software feature. Twenty years ago, a car accelerating, braking and parking itself would have been considered AI; today, those functions are “just” assisted driving. Google is a search engine and Uber a ride-sharing app, but few of the billions of users of these Web platforms would consider them AI.

Simply put, once a service or a product successfully integrates AI research into its value proposition, that technology becomes a part of the functionality of a system or service. Meanwhile, the application of this “weak” or “narrow” AI proliferates across multiple research disciplines in the diffusion of an expanding horizon of tools and technologies. These applications of AI are everywhere and, in becoming everyday “stuff that works,” simply “disappear” into the furniture and functionality of the systems and services they augment. To take one example, a chair with adaptive ergonomics based on machine learning is just a fancy chair, not AI. For this reason, it would be very difficult to build general-purpose regulatory regimes that anticipate every case of AI and machine learning. The innovation costs alone of trying to do so would be staggering.

Toward Mundane AI Regulation

The challenges in regulating AI are, then, twofold. On the one hand, if we understand AI as a series of technological practices that replicate human activities, then there is simply no single field to regulate. Instead, AI governance overlaps almost every kind of product or service that uses computation to perform a task. On the other hand, if we understand AI and dedicated AI laboratories as the basis for dramatically altering the balance of power among peoples and nations, then we have formidable challenges ahead. Beyond the exaggerations of AI often seen in science fiction, it is clearly important to develop the appropriate checks and balances to limit the concentration of power that AI technologies can generate.

Instead of the mostly binary nuclear non-proliferation lens often used to discuss AI governance, inspiration for a more relevant (albeit less exciting) model of regulation can be found in food regulation (specifically, food safety) and material standards. Much like the products and processes falling within these two regulatory domains, AI technologies are designed not as final entities, but as ingredients or components to be used within a wide range of products, services and systems. AI algorithms, for example, serve as “ingredients” in combinatorial technologies. These technologies include search engines (algorithmic ranking), military drones (robotics and decision making) and cybersecurity software (algorithmic optimization). But they also include mundane industries such as children’s toys (for semantic analysis, visual analysis and robotics) and social media networks (for trend analysis and predictive analytics; see, for example, Rangaiah 2020).

The need for AI regulation has opened a Pandora’s box of challenges that cannot be closed. And should not be — just as the quest for the unattainable philosopher’s stone created some of the most important foundational knowledge in modern chemistry (Hudson 1992), so the search for “strong AI” has produced some of the core ingredients used to develop the most exciting, profitable and powerful modern technologies that now exist. In this sense, AI technologies behave less like nuclear technologies and more like aspartame or polyethylene.

Fortunately, the mature regulatory regimes overseeing food safety and material standards have already produced a series of norms that can inspire the ways in which global AI regulation might work. Instead of trying to regulate the function or final shape of an AI-enabled technology, the object of regulation should instead focus on AI as an “ingredient” or component of technological proliferation. This approach will be particularly important in preserving innovation capacity while providing appropriate checks and balances on the proliferation of AI-driven technologies.

Envisioning Smart AI Governance

Notwithstanding the mundane aspects of AI governance, very real challenges lie ahead. We are living through a period of transition between two epochs: an industrial era characterized by predictable factory labour, and a new digital era characterized by widespread institutional unravelling. In this new century, the United States remains a formidable power, but its days of unipolar hegemony have come to an end. The hard reality is that technology is disrupting the geopolitical and regulatory landscape, driving the need for new protocols and new regulatory regimes.

Without a doubt, the most complex governance challenges surrounding AI today involve defence and security. From killer swarms of drones (Future of Life Institute 2017) to the computer-assisted enhancement of the military decision-making process (Branch 2018), AI technologies will act as a force multiplier for the capacity of nation-states to project power. While the temptation to use the non-proliferation lens is stronger here than for any other kind of AI technology (for example, ban all killer robots!), the dual-use challenge remains the same. A killer robot is not a specific kind of technology. It is, instead, the result of the recombination of AI “ingredients,” many of which are also used to, for example, detect cancers or increase driver safety.

Over and above the current COVID-19 crisis, data-driven technologies are provoking a vast geotechnological restructuring (Khanna 2014). In this new environment, AI and machine learning are set to reshape the rules of the game. As Google’s Sundar Pichai (2020) wrote early this year in an op-ed for the Financial Times, the time for properly regulating AI technologies is now. As in the postwar era, what we need is a new kind of multilateral system to oversee a highly technological civilization. Sadly, much of the existing governance architecture lacks the capacity to address the needs of a data-driven economy. Nonetheless, most governments are already beginning to explore new regulations, even as approaches vary.

Given the scale of the changes ahead, we will need to consider the appropriate regimes for regulating AI. Fortunately, this does not mean starting from scratch. Even as regulatory compliance issues around AI proliferate, many existing regulatory systems and frameworks will remain invaluable. Indeed, even as the final forms of many AI technologies differ, the underlying ingredients are shared. And just as consumer protection laws hold manufacturers, suppliers and retailers accountable, so the plethora of AI-driven products and services can be similarly regulated. Nonetheless, looking beyond the mundane regulation of AI, many big challenges remain. Solving these challenges will mean rethinking a waning multilateral order.