Key Points

- No nation alone can regulate artificial intelligence (AI) because it is built on cross-border data flows.

- Countries are just beginning to figure out how best to use and to protect various types of data that are used in AI, whether proprietary, personal, public or metadata.

- Countries could alter comparative advantage in data through various approaches to regulating data — for example, requiring companies to pay for personal data.

- Canada should carefully monitor and integrate its domestic regulatory and trade strategies related to data utilized in AI.

Many of the world’s leaders are focused on the opportunities presented by AI (the machines, systems or applications that can perform tasks that, until recently, could only be performed by a human). In September 2017, Russian President Vladimir Putin told Russian schoolchildren, “Whoever becomes the leader in this sphere will become the ruler of the world” (Putin quoted in RT.com 2017). Many countries, including Canada, China, the United States and EU member states, are competing to both lead the development of AI and dominate markets for AI.1

Canadian Prime Minister Justin Trudeau had a different take on AI. Like Putin, he wants his country to play a leading role. At a 2017 event, he noted that Canada has often led in machine learning breakthroughs and stressed that his government would use generous funding and open-minded immigration policies to ensure that Canada remains a global epicentre of AI (Knight 2017). However, Trudeau had some caveats. While AI’s uses are “really, really exciting…it’s also leading us to places where maybe the computer can’t justify the decision” (Trudeau quoted in Knight 2017). He posited that Canadian culture might offer the right guidance for the technology’s development: “I’m glad we’re having the discussion about AI here in a country where we have a charter of rights and freedoms; where we have a decent moral and ethical frame to think about these issues” (ibid.).

Canada alone cannot determine how AI is used because many applications and devices powered by AI depend on cross-border data flows to train them. In short, AI is a trade policy issue. The choices that nations make in governing AI will have huge implications for the digital economy, human rights and their nation’s future economic growth.

AI and Cross-border Data Flows

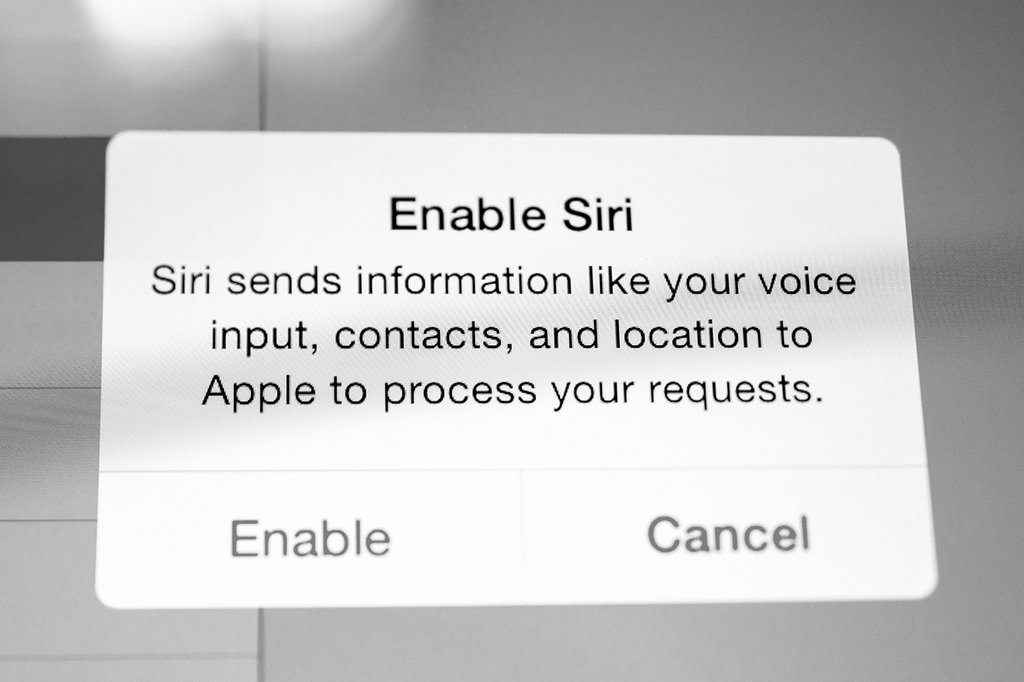

Every day, large amounts of data course through the internet, over borders and between individuals, firms and governments to power the internet and associated technologies. A growing portion of these data flows is used to fuel AI applications such as Siri, Waze and Google searches. Because many of these data flows are directly or indirectly associated with a commercial transaction, they are essentially traded. AI applications, “which use computational analysis of data to uncover patterns and draw inferences, depend on machine learning technologies that must ingest huge volumes of data, most often from a wide variety of sources” (BSA 2017). For example, when you ask a language translation app to translate where to find the best pommes frites in Paris, the app will rely on many other search queries from other apps, databases and additional sources of content. In another example, if you ask Watson, IBM’s AI-powered supercomputer,2 to diagnose rare forms of cancer, it must first sift through some 20 million cancer research papers and draw meaningful conclusions by connecting various large data sets across multiple countries (Galeon and Houser 2016).

Not surprisingly, the average netizen is increasingly dependent on AI. A Northeastern University Gallup Poll survey of 3,297 US adults in 2017 found that 85 percent of Americans use at least one of six products with AI elements, such as navigation apps, music streaming services, digital personal assistants, ride-sharing apps, intelligent home personal assistants and smart home devices (Reinhart 2018). Some 79 percent of those polled said that AI has had a very or mostly positive impact on their lives so far (ibid.). However, most users probably do not know that trade agreements govern AI. Other polls reveal that if they did, they might call for stronger privacy requirements, better disclosure and a fuller national debate about how firms use algorithms and publicly generated data (CIGI-Ipsos 2017).

The public needs such information to assess if these algorithms are being used unethically, used in a discriminatory manner (to favour certain types of people) or used to manipulate people — as was the case in recent elections (Hern 2017). Policy makers also need to better understand how companies and researchers use proprietary data, personal data, metadata (allegedly anonymized personal data) and public data to fuel AI so that they can develop effective regulation.

The Current State of Trade Rules Governing AI

Although World Trade Organization (WTO) agreements say nothing explicit about data, data flows related to AI are governed by WTO rules drafted before the invention of the internet. Because this language was originally drafted to govern software and telecommunications services, it applies to data only implicitly and is out of date. Today, trade policy makers in Europe and North America are working to link AI to trade with explicit language in bilateral and regional trade agreements. They hope this union will yield three outputs: the free flow of information across borders to facilitate AI; access to large markets to help train AI systems; and the ability to limit cross-border data flows to protect citizens from potential harm consistent with the exceptions delineated under the General Agreement on Trade in Services. These exceptions allow policy makers to breach the rules governing trade in cross-border data to protect public health, public morals, privacy, national security or intellectual property, if such restrictions are necessary and proportionate and do not discriminate among WTO member states (Goldsmith and Wu 2006).

As of December 2017, only one international trade agreement, the Comprehensive and Progressive Agreement for Trans-Pacific Partnership (CPTPP), formerly the Trans-Pacific Partnership (TPP), includes explicit and binding language to govern the cross-border data flows that fuel AI. Specifically, the CPTPP (which is still being negotiated) includes provisions that make the free flow of data a default, require nations to establish rules to protect the privacy of individuals and firms providing data (a privacy floor), ban data localization (requirements that data be produced or stored in local servers) and ban all parties from requiring firms to disclose source code. These rules reflect a shared view among the 11 parties: nations should not be allowed to demand proprietary information when facilitating cross-border data flows.3

The United States (which withdrew from the TPP) wants even more explicit language related to AI as it works with Mexico and Canada to renegotiate the North American Free Trade Agreement (NAFTA). The United States has proposed language that bans mandated disclosure of algorithms as well as source code (Office of the United States Trade Representative 2017). The United States wants to ensure that its firms will not be required to divulge their source code or algorithms even if the other NAFTA parties require such transparency to prevent firms from using such algorithms in a discriminatory manner, to spread disinformation or in ways that could undermine their citizens’ ability to make decisions regarding their personal information (autonomy). Hence, the United States is using trade rules to “protect” its comparative advantage in AI.

Like most trade agreements, the CPTPP and NAFTA also include exceptions, where governments can breach the rules delineated in these agreements to achieve legitimate domestic policy objectives. These objectives include rules to protect public morals, public order, public health, public safety and privacy related to data processing and dissemination. However, governments can only take advantage of the exceptions if they are necessary, performed in the least trade-distorting manner possible and do not impose restrictions on the transfer of information greater than what is needed to achieve that government’s objectives. Policy makers will need greater clarity about how and when they can take these steps to protect their citizens against misuse of algorithms.

AI Strategies, Domestic Regulation and Trade

Some states and regions are developing very clear and deliberate policies to advance AI both within and beyond their borders. China’s free trade agreements do not contain binding rules on data flows or language on algorithms. But the country uses the lure of its large population, relatively weak and poorly enforced privacy regulations, and subsidies to encourage foreign companies to carry out AI research in China. At the same time, the United States seems to be using trade agreements to reach beyond its 318 million people and achieve economies of scale and scope in data (Aaronson and LeBlond, forthcoming 2018).

However, the European Union seems to be taking the most balanced approach, recognizing that it cannot encourage AI without maintaining online trust among netizens that their personal data will be protected. The 28 (soon to be 27) member states of the European Union agreed4 to create a digital single market as a key part of their customs union.5 The European Commission also launched a public consultation and dialogue with stakeholders to better understand public concerns about the use of data.6 In 2016, the European Union adopted the General Data Protection Regulation (GDPR), which replaces the Data Protection Directive. The GDPR takes effect on May 25, 2018 and provides rules on the use of data that can be attributed to a person or persons.7 In October 2017, the European Commission proposed a new regulation “concerning the respect for private life and the protection of personal data in electronic communications” to replace the outdated e-Privacy Directive (European Commission 2017b).

The GDPR has important ramifications for companies that use AI. First, the regulation applies to all companies that are holding or processing data from EU citizens whether or not they are domiciled in the European Union. Second, it gives citizens the ability to challenge the use of algorithms in two ways. Article 21 allows anyone the right to opt out of ads tailored by algorithms. Article 22 of the GDPR allows citizens to contest legal or similarly significant decisions made by algorithms and to appeal for human intervention. Third, it uses disincentives to secure compliance. Companies that are found to violate the regulation will be “subject to a penalty up to 20 million euro or 4% of their global revenue, whichever is higher” (Wu 2017).
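The penalty ceiling quoted above is a simple "whichever is higher" rule, and a short calculation shows why it weighs most heavily on large firms. The sketch below is illustrative only: the function name and the sample revenue figures are hypothetical, and it computes the maximum exposure described in the quotation, not the fine a regulator would actually impose.

```python
# Illustrative sketch of the GDPR penalty ceiling as quoted in the text:
# "up to 20 million euro or 4% of their global revenue, whichever is higher".
# The function name and the example revenues are hypothetical.

FLAT_CEILING_EUR = 20_000_000   # fixed ceiling from the quoted rule
REVENUE_SHARE_PERCENT = 4       # percentage of global revenue from the quoted rule


def max_gdpr_penalty_eur(global_revenue_eur):
    """Return the maximum possible penalty (in euros) for a given global revenue."""
    revenue_based_ceiling = global_revenue_eur * REVENUE_SHARE_PERCENT / 100
    # "Whichever is higher": the larger of the two ceilings applies.
    return max(FLAT_CEILING_EUR, revenue_based_ceiling)


if __name__ == "__main__":
    # Hypothetical firms with 100 million, 500 million and 1 billion euros in revenue.
    for revenue in (100_000_000, 500_000_000, 1_000_000_000):
        print(revenue, max_gdpr_penalty_eur(revenue))
```

Under this reading, the flat 20 million euro ceiling applies to any firm with global revenue below 500 million euros, while the 4 percent ceiling takes over above that level.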

Analysts disagree about the costs and benefits of this mixed approach of incentives to AI coupled with strong rules on data protection. Some analysts believe that firms may struggle to inform netizens as to why they used specific data sets, or to explain how a particular algorithm yielded x result (Jánošík 2017). Others contend that the regulation may not be as onerous as it seems; in fact, the regulation really states that people need to be informed about the use of algorithms, rather than specifically requiring that the use be clearly explained to the average citizen (Wachter, Mittelstadt and Floridi 2017). Still others find this strategy will have multiple negative spillovers: raising the cost of AI, reducing AI accuracy, damaging AI systems, constraining AI innovation and increasing regulatory risk. Nick Wallace and Daniel Castro (2018) noted that most firms do not understand the regulation or their responsibilities. In short, the regulation designed to build AI could undermine the European Union’s ability to use and innovate with AI.

Implications for Smaller and Developing Countries, including Canada

Countries are just beginning to figure out how best to use and to protect various types of data that could be used in AI, whether proprietary, personal, public or metadata. Most countries, especially developing countries, do not have significant expertise in AI. These states may be suppliers of personal data, but they do not control or process data. But their policy makers and citizens, like those in industrialized countries, can take several steps to control data and extract rents from citizens’ personal data (Porter 2018).

These states may decide to shape their own markets by developing rules that require companies to pay them for data (Lanier 2013). Developing countries with large populations are likely to have the most leverage to adopt regulations that require firms to pay rents for their citizens’ data. In so doing, they may be able to influence comparative advantage in the data-driven economy.

Meanwhile, Canada will need to better integrate its trade and AI strategies. Canada has a comparative advantage in AI, but its companies and researchers will need larger amounts of data than its 38 million people can provide (Aaronson 2017). Canada will need to use trade agreements to foster the data pools that underpin AI, while reassuring citizens that their personal data (whether anonymized or not) is protected. NAFTA renegotiations, assuming they are not undermined by US President Donald Trump, provide an opportunity to begin a different discussion in North America on AI. Canada’s AI sector is closely integrated with that of the United States; both nations need to encourage the data flows that power AI while simultaneously protecting citizens from misuse or unethical use of algorithms. A forthcoming CIGI paper will discuss how Canada might create a new approach to data-driven trade, regulating data not just by the type of service but also by the variant of data.