Platforms have a politics of their own: corporations are struggling with calls to better address the spread of hate speech, disinformation, networked harassment and conspiracy theories. Researchers, journalists and activists are pushing for changes to mitigate the harm of dangerous and inflammatory content that violates human rights standards, incites direct violence and inspires hate crimes around the world. Data-gathering operations (such as those carried out by Cambridge Analytica) and the influence of foreign operations continue unabated, despite the public scandal. As we close out 2019, all major US social media platforms are bracing for the onslaught that will be the 2020 election season and coming to terms with how seriously unprepared they really are.

Google recently announced new advertising policies that are intended to go into effect before the 2020 US elections. New limitations on ad targeting (specifically, the inability to target based on political affiliation and audience reach) are intended to prevent a flood of disinformation and any attempts to suppress voters in marginalized groups. These changes to corporate policy come on the heels of Facebook’s announcement that it would not fact-check any political ads.

Democratic political candidate Elizabeth Warren tested the limitations of Facebook’s commitment to moderating political ads by running an ad containing a blatantly false claim, highlighting the platform’s unwillingness to differentiate fact from fiction. In response to Facebook’s policy of non-moderation, Twitter notably announced a ban on all political ads. In a public statement, CEO Jack Dorsey claimed, “We believe political message reach should be earned, not bought.”

While Facebook refuses to fact-check political ads, smaller platforms such as Snapchat are committing to increasing their capacity to do so. While these companies prepare for a wave of paid promotions by political figures, an alternative economy of independent journalists, commentators and propagandists is subject to a different set of rules; some of these individuals will be gearing up to spread disinformation to sway public opinion using digital dirty tricks.

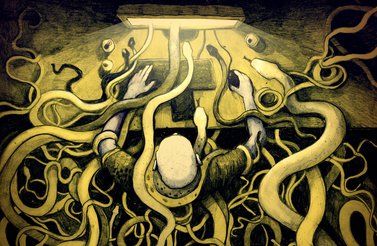

Content creators, accustomed to lenient content moderation, have turned on YouTube for its uneven application of new policy around monetization. The so-called “adpocalypse,” reactive moves by YouTube to control what constitutes “advertiser-friendly content,” has left creators aggravated because they cannot rely on a steady income from the platform. YouTube is still haunted by a very public controversy surrounding a string of disturbing videos masquerading as content suitable for children; in one case, YouTube’s content-serving algorithm seemed to lace together videos containing young children playing. A new wave of protection under the US Children’s Online Privacy Protection Act goes into effect this month — another example of policy written to grapple with the flawed way platforms operate, failing both consumers and creators. In the lead-up to this latest sweeping change, many creators are left asking what content is acceptable, and which uploads could lead to a permanent ban. While YouTube is responding to the legal imperative driving the decisions around children’s content, it appears to feel no such urgency in its approaches to politicized attacks on individuals.

Google, YouTube, Facebook and Twitter's small acts of self-regulation are not enough. In 2020, they must take a global policy approach to hate speech and disinformation, says @BostonJoan 🔊 pic.twitter.com/NMm0b7DGQq

CIGI (@CIGIonline), December 12, 2019

For example, YouTube has a serious problem with the targeted harassment of journalists, exemplified by a recent conflict between Carlos Maza, a journalist for Vox, and Steven Crowder, a comedian and conservative podcast host. In a number of videos, Crowder made racist and homophobic comments, many of them about Maza. In response, Maza decided to take a public stand. He shared a compilation of clips from Crowder’s daily YouTube videos that contradicted YouTube’s own signalling of diversity and LGBTQ pride. After releasing a series of flawed public statements, YouTube decided to temporarily suspend Crowder’s ability to monetize videos, and several more extreme channels were also caught up in this controversy. The highly publicized conflict between Maza and Crowder resulted in minimal action against Crowder; if anything, Crowder’s audience has expanded because of it. This episode follows the high-profile deplatforming of conspiracy theorist Alex Jones in 2018; it has taken YouTube several years to begin to tackle an influence network of far-right propagandists masquerading as American conservatives.

To say the least, these new monetization policies were not applied equally, nor was YouTube’s policy against white supremacist and extremist content. While queer creators are subject to ongoing harassment by other users and content restriction by the platform itself, American neo-Nazi group Atomwaffen was still able to publish several videos featuring kill lists. This access is particularly troubling in light of a trend of white mass shooters livestreaming terrorist acts on Facebook and Twitch, with clips of the attacks being re-uploaded to YouTube at an alarming rate. Major platforms were able to act in unison against other terrorists, so why won’t they act against the growing tide of white extremists?

Many former YouTubers who were deplatformed for violating terms of service now use alternative streaming platforms; clips of their live shows are then circulated on YouTube anyway. Several prominent white nationalist content creators, such as Red Ice TV, remained on YouTube until recently. And despite Facebook’s ban on white supremacist content, Red Ice TV still streams on Facebook. Continuous updates to YouTube’s and other platforms’ policies on objectionable and “borderline” content, along with promises to recommend “trusted sources,” show that platform companies are finally aware of the phenomenon but remain unable to properly address the damage already done.

While there is still a great deal of debate as to what exactly constitutes a “far-right rabbit hole” among the increasingly extreme algorithmically suggested content online, it’s clear the core ideology of white supremacy is amplified by platforms’ products. And the impacts of this amplification are manifest in several high-profile violent terrorist attacks that took place in recent months in Christchurch, El Paso and Poway. We still see the networked harassment of journalists, women and LGBTQ folks when users evade platform policies by generating online outrage and exploiting a company’s desire for a positive public image.

As we approach the US elections in 2020, more controversies will envelop these companies. The ambiguous enforcement of policies will continue until regulations are put in place to standardize compliance. For now, these companies will do the minimum required to avoid public controversies and keep stock prices stable. Regrettably, in the meantime, society will pay the price.