The libraries, archives and other institutions on which we rely to support the collection, preservation and dissemination of human knowledge are essential, if often overlooked, elements of our society. Indeed, the systems, materials and workers (librarians, archivists, information technology professionals, data scientists, lawyers, and so forth) in these institutions can be considered the essential infrastructure that underpins all other research, both in Canada and globally.

With the rise in the use of artificial intelligence (AI), and the too-often rushed adoption or over-adoption of AI tools without proper appraisal of their effects, research institutions, and the precious human knowledge and records they hold, are put at risk. If we wish to preserve research institutions as repositories of human knowledge and spaces of exploration, we must think deeply about how AI might change them. And we need to develop clear governance to ensure that any changes preserve these institutions’ function and value rather than erode the quality, undermine the value or homogenize the diversity of knowledge they support.

We urgently need effective policy, both to protect the value and purpose of research institutions in society and to lay out a responsible pathway for the deployment of AI in the sector that balances safety with the spirit of innovation. Without comprehensive and widespread coordination, research institutions will have terms dictated to them by AI companies, rather than deciding collectively what the future of research will look like.

Benefits

AI use in research offers many easily recognizable benefits: advances in algorithmic search; AI-enabled analysis of linked data and metadata; the easing of certain difficult tasks, such as cataloguing materials; the use of optical and intelligent character recognition and other software to digitize material and make it accessible online; and the ability to perform complex bibliometric and bibliographic analyses that trace research connections across disciplines in ways humans might not otherwise recognize.

Another benefit for some, and a serious risk for others, is the way in which AI deployment can reduce the need for library staff or replace paid student and permanent positions. Some administrators may see these effects as beneficial cost savings. However, such cuts to the human workforce diminish the vibrancy and quality of research institutions. We must recognize and reckon with the fact that for many, the benefits of AI come down simply to the replacement of human labour. This is deeply worrying.

Risks

The risks of AI deployment in research are many, and often related to issues that are intangible or hard to quantify. Such risks include an overreliance on AI tools for tasks that previously built critical thinking in students and researchers; erosion of trust in information as a result of hallucinations or misrepresentations by generative AI and large language models (LLMs); and a loss of diversity of ideas through data bias.

More concrete risks include the potential for data corruption or loss; vulnerability of systems to exploitation, through prompt injection or data-poisoning attacks; predatory data-sharing agreements that limit institutions’ use of metadata in their own AI research, or that give third-party providers access to institutional data without fair compensation; and the undue influence and control over research that comes with anti-competitive monopoly dynamics in academic publishing and software licensing.

On the last point, the few companies developing AI tools for libraries and research systems (Elsevier and Clarivate, to name but two) are the same large, transnational companies that own most copyrighted academic material (in the form of books, journals and other essential materials), which they license to universities for high fees. A scenario in which both the material necessary for research and the tools through which that material is accessed and analyzed are owned by a few transnational companies would be a disaster for academic freedom and diversity of thought.

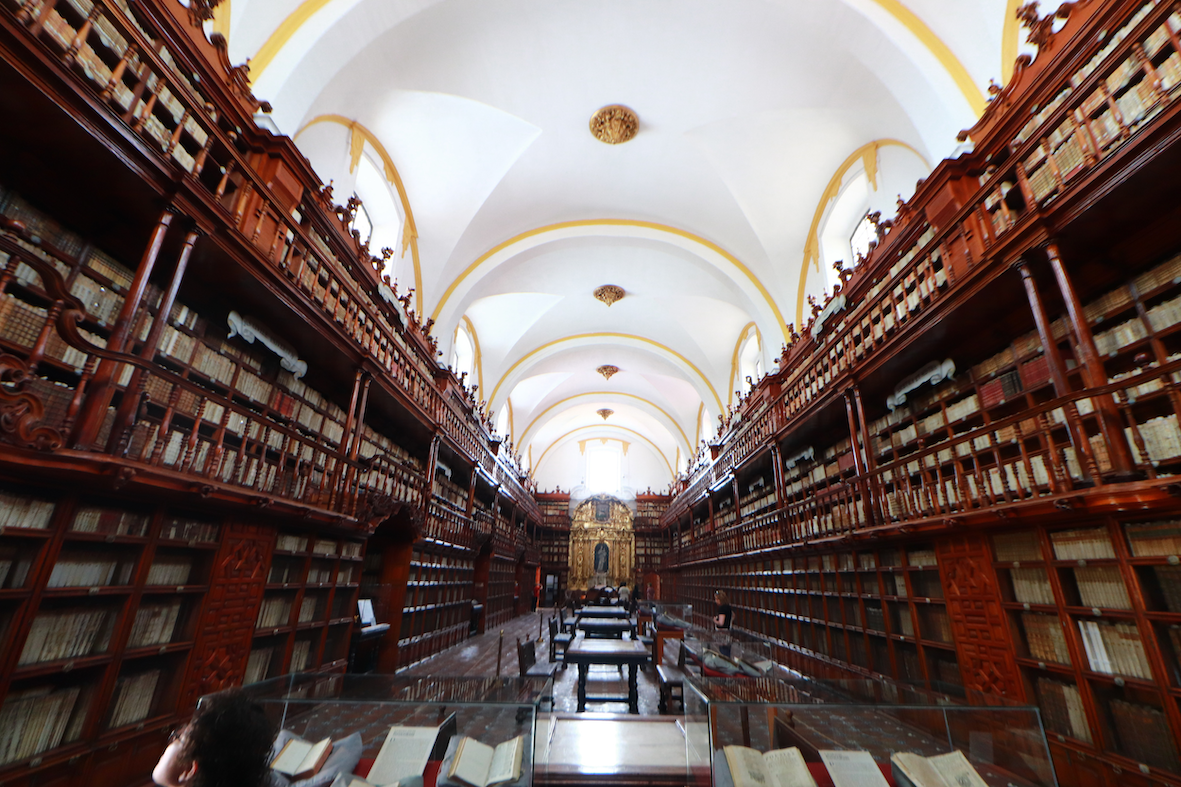

Organizing the past to make sense of it extends beyond historical narratives. It’s a central function of libraries, archives and other repositories of information and data.

What Are We Protecting?

Hayden White, the late and celebrated American philosopher, argued that written narratives of history organize disparate historical facts into something we can make sense of. The stories we build out of historical fact, including the exact sequence of events and the lessons to be learned, are not always strictly true. But they offer a perspective on the factual record; they frame facts as a logical narrative from which we can derive meaning.

Organizing the past to make sense of it extends beyond historical narratives. It’s a central function of libraries, archives and other repositories of information and data. Where a single narrative shows us one perspective on a period or event, a library provides us with an endless array of narratives, sources and data from which to form countless combinations and perspectives.

In turn, how a library is ordered and structured suggests certain ways of using it. A researcher does not have to follow these paths, just as a reader may disagree with or reject a historian’s narrative, but a path is nevertheless laid out. The organization of information in a repository of knowledge has encoded in it certain assumptions about what research can or should be done there, the role of research in society, and who should be part of that process. Over the past several decades there have been extensive debates about how such institutions should orient themselves to be more inclusive of different perspectives and to shed the organizational legacies of colonialism and prejudice that have shaped their institutions, materials and methods. How we think about and organize our information and data, and how we structure researchers’ access to information, is a statement of what we wish research to do, who “we” includes, and the role research might play in shaping the future.

This is true not only of history and the humanities, but also of the sciences and social sciences. Here, data may point to “objective” truths or facts. But how we frame those facts, how data are catalogued and labelled, the kinds of questions we ask of them and the conclusions we draw are all rooted in choices and perspectives shaped by social and political context. That framing shapes the kind of narrative we choose to tell. How we structure access to, and preserve, information says a great deal about our assumptions about the role of research in society and how it can help transform society for the better. In turn, how we deploy AI in these irreplaceable institutions, and how we allow it to structure and shape our access to and analysis of information, will say a lot about what we want our future society to be like.

Finding a Path Forward

Consequently, if we wish to extensively integrate AI tools into libraries and research, we must first think deeply about, and build consensus around, the notion of what research should do, and how, and the basic principles we aim to promote and preserve. And that deep thinking needs to happen before AI tools are rolled out in research institutions.

These priorities should be determined collectively by researchers, librarians, archivists and all others engaged in research. Some clear areas that might be of particular importance, considering the historical role of research and the hard-fought efforts to reform and reframe it to be more representative, are:

- preservation of context;

- preservation of the integrity of material (digital and physical);

- sustainability, in terms of the longevity of systems and their impact on the world around them (socially and ecologically);

- accessibility, meaning both promoting access and upholding the rights of groups to limit access to their own information and data when needed, such as Indigenous communities asserting their data sovereignty or researchers protecting sensitive or dual-use research;

- transparency in how AI is being used, and in how information and data are organized and for what purpose, with clear and democratic procedures for making changes to these processes;

- training for workers, researchers, administrators, educators and students on how to use AI tools, the associated risks, and the best practices for integrating these tools effectively into their work without compromising quality; and

- building a community of practice for AI development that includes but goes beyond engineers and computer scientists.

In addition, a governance structure for research would require, at minimum, guidance on data governance and licensing agreements with third parties (similar to the Canadian Research Knowledge Network model licensing agreement, for example) and enhanced privacy requirements that consider not only how personal data are collected and used but also the implications of those data being used in perpetuity to train a model, bearing in mind that a given AI tool may be used for countless future projects.

Standardization

In a context in which government has given no indication that AI governance in the research sector is a priority, and where research institutions are too diffuse to form a comprehensive sector-wide policy, one path forward might be through standardization.

The standards-development process is an often-technical tool used by companies and industries to create unifying guidelines for technology, governance and other key issues. Standards have been criticized for being industry-dominated, undemocratic or insufficiently consultative. However, if viewed as a tool for bringing perspectives together to come up with a collective plan for governance, standardization could help bridge the divide between institutional, provincial, national and international policy. In a recent paper, Michel Girard argues that standards are inherently encoded with values that can serve as a basis for embedding norms in technologies and sectors from the outset. This turns a perceived weakness of standards into a strength, essentially creating a new tool kit for normative and values-based governance that can be truly democratic and serve as a basis for building resilient and safe technology ecosystems.

Currently, the Digital Governance Council is developing a Canadian national standard for AI use in research institutions (CAN/DGSI 128), which will serve to create a baseline of key principles for institutions to follow. This work could progress to an international standard through the International Organization for Standardization or another international organization. This standard may not be the ultimate solution. But it may present one path forward in what will no doubt be a long and complex process of learning to understand and co-exist with AI in research. The key is that we move forward in ways that advance, rather than hinder, the creation and preservation of knowledge.

Universities and other research institutions cannot wait for the government to create policies on their behalf, nor can they hope that the agreements and systems offered to them by third parties will necessarily be aligned with the purpose and spirit of public research.

Rather, they need to engage in a broad, in-depth and rigorous process of exploring how research should look in the future and the values on which it will be based, and only then consider how AI tools might help to make that future a reality.