Not humans, but algorithms piloting advanced fighters? It’s not science fiction, but rather recent history. In February 2023, Lockheed Martin Aeronautics announced that artificial intelligence (AI) software had flown a modified F-16 fighter jet for 17 hours in December 2022. No human was aboard during this flight, the first in which AI had been used on a tactical aircraft.

The test reflects the interest that several countries have in developing sixth-generation fighter jets in which AI algorithms are in control. Combined with the dizzying pace of recent releases of AI-enabled technologies, such developments have raised a red flag for those on the world stage who demand that human control over weapons systems be maintained and regulated. Without immediate action, the opportunity to regulate AI-assisted weapons may soon vanish.

The Role of AI

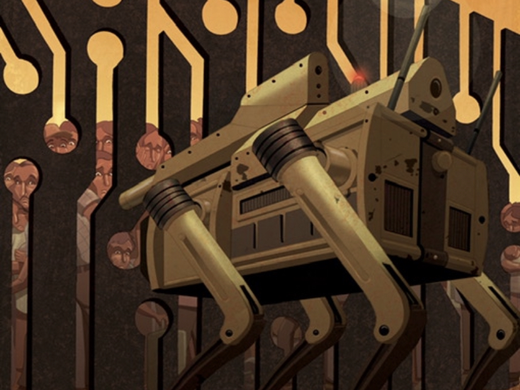

AI is at the heart of the growing autonomy of certain weapons systems. Some functions of key concern, such as target selection and engagement, could soon be shaped by the incorporation of AI. Battlefield use of AI is clearly visible in the war in Ukraine, where applications range from targeting assistance to advanced loitering munitions. Nor is Ukraine the first example of such battlefield deployment. The Turkish-made Kargu-2 loitering munition, with AI-enabled facial recognition and other capabilities, has drawn much attention for its use in the war in Libya.

With AI dominance now seen as central to the competition among the world's great military powers, the United States and China in particular, investment in military AI is certain to grow. In 2021, the United States was known to have approximately 685 ongoing AI projects, including some tied to major weapons. The budget for fiscal year 2024 released by President Joe Biden in March 2023 included $1.8 billion for AI development. China's investment in military AI is not publicly disclosed, but experts believe Beijing has committed tens of billions of dollars.

The decade-long discussion on lethal autonomous weapons at the United Nations Convention on Certain Conventional Weapons (CCW) has been slow-moving. But it has served as an incubator for an international process that aims to establish and enforce rules for such weapons. At the beginning of the discussions, some states, such as the United Kingdom, argued that autonomous weapons might never exist, as states would not develop them. That argument can no longer stand.

Beyond the CCW

The policy community beyond the CCW is paying attention. Some analysts have expressed concern about the ways in which AI could further diminish human control. Paul Scharre, vice president and director of studies at the Center for a New American Security, points out that "militaries are adding into their warfighting functions an information processing system that can think in ways that are quite alien to human intelligence." Systems that lack clear human control, or whose possible actions military commanders do not sufficiently understand, require specific prohibitions. Scharre cautions that humans could eventually lose control of the battlefield.

States share this concern as well. New policy documents that parallel the CCW discussions include the Political Declaration on Responsible Military Use of Artificial Intelligence and Autonomy released by the United States and the Costa Rica–led Belén Communiqué on autonomous weapons. Both were released in February 2023. The US declaration stresses voluntary measures and best practices, an approach long favoured by Washington and its allies. The Costa Rica–led effort calls for a legally binding instrument with both prohibitions and regulations on autonomous weapons. This so-called two-tiered approach recommends specific prohibitions on weapons that function beyond meaningful human control, and the regulation of other systems that may have sufficient human control but are, for example, deemed high risk to civilians or civilian infrastructure.

The two-tiered approach was prominent in discussions at the first meeting of the 2023 Group of Governmental Experts at the CCW, held from March 6 to 10, 2023, in Geneva. Most states appeared to support a two-tiered approach, and only a handful of the 80 or so states that usually participate were strongly opposed. However, it was also clear that views diverge on what such an approach would mean.

For example, France proposed that the prohibition focus on what it understands to be fully autonomous weapons systems. In the French view, fully autonomous weapons function with no human control and outside the military chain of command. As civil society organizations such as Article 36 and Reaching Critical Will have noted, this definition is unhelpful and unrealistic: no state would develop systems that operate outside its chain of command. Setting the bar for prohibition that high effectively means no prohibitions at all.

A submission from a group led by the United States and including Australia, Canada, Japan, South Korea and the United Kingdom proposed prohibiting weapons that, by their nature, cannot be used in compliance with international humanitarian law (IHL). However, the group saw no need for a legally binding instrument and instead focused on existing IHL principles.

Russia, Israel and India were the most vocal in their opposition to any legally binding instrument emerging from the CCW process.

The CCW Going Forward

So far, CCW meetings have tended to be insulated from actual technological advancements. Generally, the more militarily powerful states have sought either to stall discussions, as Russia has, or to propose voluntary measures to guide the development of autonomous weapons, as the United States has. Few states with advanced militaries have seriously engaged with, or explained, their own use of new technologies over years of CCW meetings. The United States has discussed some decades-old systems but none of its more recent technologies.

The next CCW meeting in Geneva is scheduled for May 15–19, 2023. Given the many recent media reports on AI (such as ChatGPT), the growing body of expert opinion and the AI tools that diplomats themselves may be using, such as AI-assisted writing software, this meeting presents an opportunity to discuss concrete examples of how militaries use AI. Advocates for regulation will certainly be expecting, and calling for, such a discussion. For example, the United States could explain in more detail how its air force used AI to identify targets in a live operational "kill chain," the process that runs from detecting a target to firing on it. How, exactly, did AI aid target selection and engagement?

China, which has played a balancing role in the CCW, appearing to advocate for regulation while keeping its own options open, could state specifically what limitations it envisions for AI-driven weapons. Chinese diplomats have long called for prohibiting offensive uses of autonomous weapons while permitting defensive ones. That distinction is meaningless in a world in which the line between defensive and offensive weapons is impossible to draw.

The message the attendees at this meeting most need to express, and amplify? The global community urgently needs a new regulatory framework that places constraints on the development of any weapons that further diminish human agency over the use of force, and we can't wait much longer. Left unchecked, the marriage of AI with the world's most sophisticated weapons could be catastrophic.